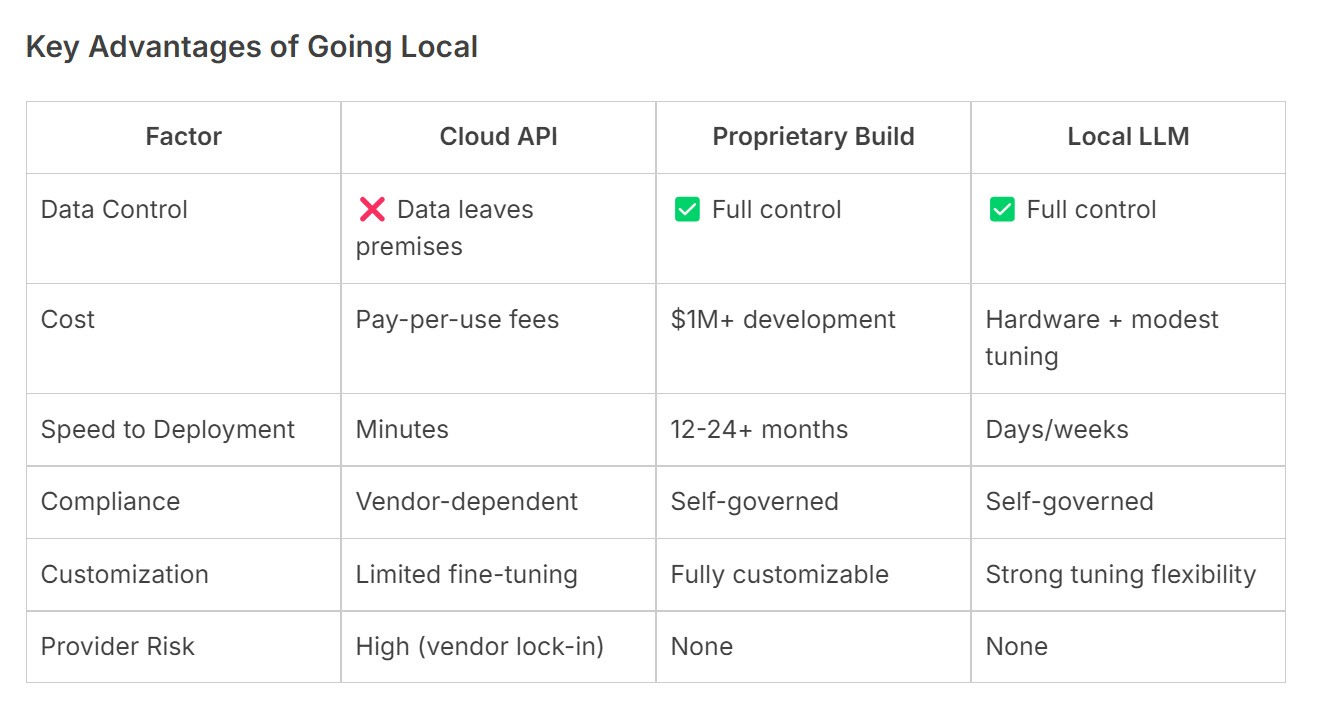

As enterprises race to leverage generative AI, the debate often centers on two paths:

- Cloud-based APIs (e.g., OpenAI, Anthropic)

- Building proprietary models from scratch

Traditional cloud data centers have served as the bedrock of computing infrastructure for over a decade, catering to a diverse range of users and applications. In recent years, however, data centers have evolved to keep up with advances in technology and the surging demand for AI-driven computing. Two distinct classes of data centers are now emerging: AI factories and AI clouds. Both are tailored to the unique demands of AI workloads, which rely heavily on accelerated computing.

But a critical third option is also gaining traction:

Locally hosted open-source LLMs: a solution tailored for organizations that prioritize data sovereignty and compliance, or that distrust external AI providers.

Why Local LLMs? Addressing the Unspoken Concern

While cloud APIs offer convenience and cutting-edge capabilities, they raise two fundamental issues:

- Proprietary Data Risk: Sensitive data (e.g., healthcare records, legal documents, R&D) leaves your infrastructure.

- Provider Dependence: You depend on a third party for security, compliance, and pricing. Often the AI vendor itself is the concern, because of its data usage policies or regulatory exposure.

Building custom models solves both problems but demands massive resources: millions of dollars, ML expertise, and years of development.

Enter Local LLMs: Deploy curated open-source models (e.g., Llama 3, Mistral, Command R+) entirely within your infrastructure. This approach balances security, control, and practicality. AdaptAI is an example of a local LLM available today. It’s developed from open-source components and can be installed, trained, and operated on an organization’s servers with an RTX 3090 or greater GPU.
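To make "entirely within your infrastructure" concrete, here is a minimal sketch of querying a locally hosted model, assuming an Ollama server is running on its default port on the same machine and that a model tagged `llama3` has already been pulled. The helper names `build_request` and `generate` are hypothetical, chosen for illustration. The prompt is sent to `localhost` and nowhere else.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generation request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, the reply is a single JSON object whose
    # "response" field holds the generated text.
    return body["response"]
```

With the server running, `generate("Summarize this contract clause: ...")` would return the model's answer as a string. Splitting request construction from sending keeps the network call in one place, which makes it easier to audit that traffic only targets an internal address.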

Ideal Use Cases for Local LLMs

- Confidential Industries
  - Legal, healthcare, or finance firms handling sensitive client data.
  - Example: A hospital supports patient diagnosis with an on-premises Llama 3 instance, so PHI never leaves its network.

- IP-Sensitive R&D
  - Manufacturing, pharma, or tech companies protecting trade secrets.
  - Example: Analyzing patent drafts internally without exposing them to a cloud provider.

- Regulatory Compliance
  - GDPR, HIPAA, or sovereign data laws requiring data residency.
  - Example: An EU bank processes customer queries locally to meet GDPR requirements.

- Distrust of AI Vendors
  - Avoiding vendor data usage policies, audits, or future pricing shifts.

Implementing Local LLMs: Practical Steps

- Hardware: Run quantized 7B to 70B parameter models on enterprise GPUs (e.g., NVIDIA A100s) or, for the smaller models, even high-end CPUs.

- Tools: Leverage Ollama, vLLM, or Hugging Face’s Text Generation Inference for deployment.

- Customization: Fine-tune base models (e.g., with LoRA) using internal data without external exposure.
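As a rough aid for the hardware step above, the sketch below estimates whether a quantized model fits in a given amount of GPU memory. It rests on simple arithmetic: weight memory is approximately parameter count times bits per weight divided by 8. The 20% overhead for the KV cache and activations is an assumed placeholder, not a measured figure, and real usage varies with context length and batch size. The function names are hypothetical.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * (bits / 8) bytes each, divided by 1e9.
    """
    return params_billion * bits_per_weight / 8


def fits_in_memory(
    params_billion: float,
    bits_per_weight: int,
    gpu_memory_gb: float,
    overhead: float = 0.2,  # assumption: 20% extra for KV cache and activations
) -> bool:
    """Return True if the estimated footprint fits in the given GPU memory."""
    needed = weight_memory_gb(params_billion, bits_per_weight) * (1 + overhead)
    return needed <= gpu_memory_gb


# A 70B model at 4-bit needs about 35 GB for weights alone,
# versus about 140 GB at 16-bit.
print(weight_memory_gb(70, 4))   # 35.0
print(weight_memory_gb(70, 16))  # 140.0
```

Under these assumptions, a 4-bit 7B model fits comfortably on a 24 GB card such as the RTX 3090, while a 4-bit 70B model needs something closer to an 80 GB A100 or a multi-GPU setup.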

The Bottom Line

Local LLMs unlock AI’s potential for high-stakes environments where data privacy and provider independence are non-negotiable. They offer a pragmatic middle ground – delivering control without the complexity of building from scratch. As open-source models rapidly close the performance gap with commercial offerings, this third path isn’t just viable: it’s becoming strategic.